For the past year, I’ve been a vocal advocate for embracing AI, despite the fear it often provokes in higher education. My concern arises when AI becomes so seamless and integrated that I can barely tell when I’m using it.

Using AI requires a conscious action on our part

Currently, using AI requires a conscious effort on our part. To access it, I need to open my browser and navigate to chat.openai.com, or, when using Bing, actively select the Copilot feature by clicking its button on the right side of the screen. In other words, every interaction with AI begins with a deliberate decision to engage with the tool.

My fear: when AI and human content become indistinguishable

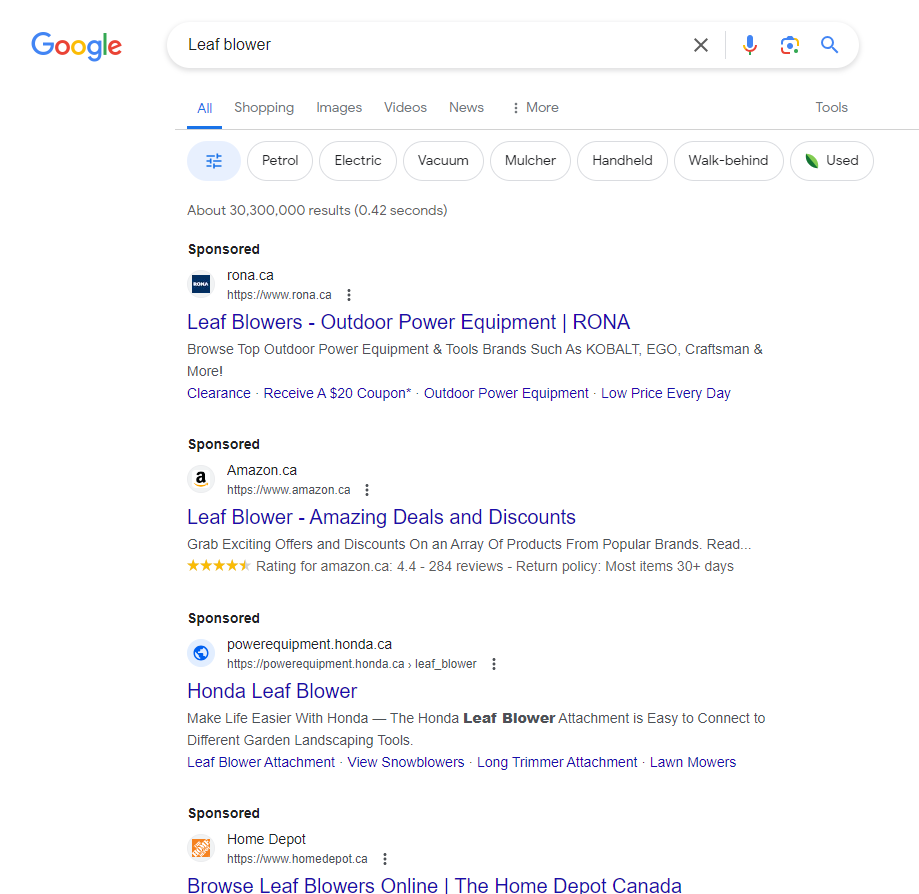

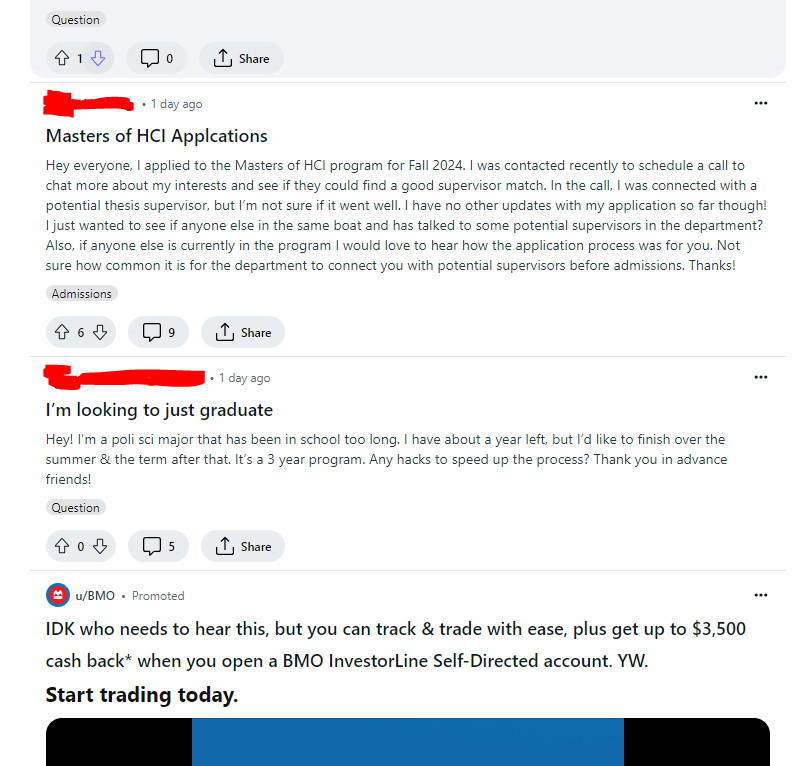

My fears will grow when we, as users, lose the ability to tell when we are using generative AI. I anticipate this boundary blurring sooner rather than later, as the technology advances and these tools are further refined. I recall reading last year about Google’s plans to integrate generative AI content into its search results, a move likely to make it even harder to differentiate between AI-generated and human-created content. This mirrors the trend of companies increasingly blurring the lines between advertisements, sponsored links, and organic content.

The generative AI crawl

There might already be terminology for this concept, but my thoughts on user interface and user experience have led me to what I’ve termed “generative AI crawl”: the visual manifestation of AI-generated content in action. I’ve observed it in several tools. In ChatGPT, the generation process is visible as the response unfolds on screen. Gemini and Copilot behave similarly, creating the impression that the tool is producing content in real time.

Examples of generative AI crawl

Visual cues such as these help reveal which content is machine-generated and which is human-created.

What will happen when computers can generate text instantaneously? Will regulators mandate a visible indication of AI at work, a generative AI crawl, even when processing no longer requires it? I contend that, regardless of processing needs, there should be a mandate for some form of visual representation whenever an AI model is producing information: a visual cue that signals AI’s involvement in the information’s creation. Such a cue would help users distinguish machine-generated content from human-created content.

Last summer, I watched Sam Altman’s testimony before the U.S. Congress, part of a comprehensive hearing on artificial intelligence. During his appearance, Altman urged the government to regulate AI to ensure the technology is developed safely. At first, I was astonished: rarely, if ever, had I witnessed a CEO openly request regulatory oversight of their own industry. On reflection, however, I’ve come to understand the significance of such a stance. Governments do bear a responsibility to safeguard their citizens against the potential risks of generative AI technologies.

In that same Congressional hearing, Christina Montgomery, IBM’s Chief Privacy and Trust Officer, suggested that companies developing or using AI systems “be transparent, consumers should know when they are interacting with an AI system.”

One effective way to mitigate the dangers of AI-generated misinformation and disinformation could be to require distinct visual indicators, such as a generative AI crawl, that clearly separate AI-generated content from human-created content.

Conclusion

While this won’t fix every problem in determining which content is generated by AI and which was, at least in theory, published by a human, it begins to address how companies are integrating generative AI into products we already use, such as Google Search and Microsoft’s products. We are currently riding the hype train around generative AI, but the trend will no doubt lose its novelty. It will soon just exist. In everything we do. As librarians and information professionals, it will be our responsibility to equip students, staff, and faculty with the skills needed to navigate this increasingly machine-generated world.