The emergence of ChatGPT in academia

Recently, the integration of ChatGPT into scholarly conversations has sparked significant debate among educators and academics. The concern arises primarily from cases where large excerpts generated by ChatGPT have been pasted directly into articles and submitted to academic publications. Notably, these cases are turning up in peer-reviewed academic sources.

Let me be clear: I am not against the use of ChatGPT. In fact, I use this innovative tool regularly to enhance, proofread, and fine-tune my writing. Yet the issue becomes more concerning when the people expected to identify and address these problems overlook them.

Social media: The unlikely guardian

Despite widespread skepticism toward social media, it undeniably holds value. My attempts to distance myself from Reddit have been unsuccessful, as I find myself repeatedly lured back by its captivating appeal.

The ChatGPT subreddit, for instance, is frequently filled with whimsical images of Mario or Luigi battling enormous mechanized robots. However, amidst this playful content, one can occasionally stumble upon more substantial discussions and topics.

A case study from Reddit

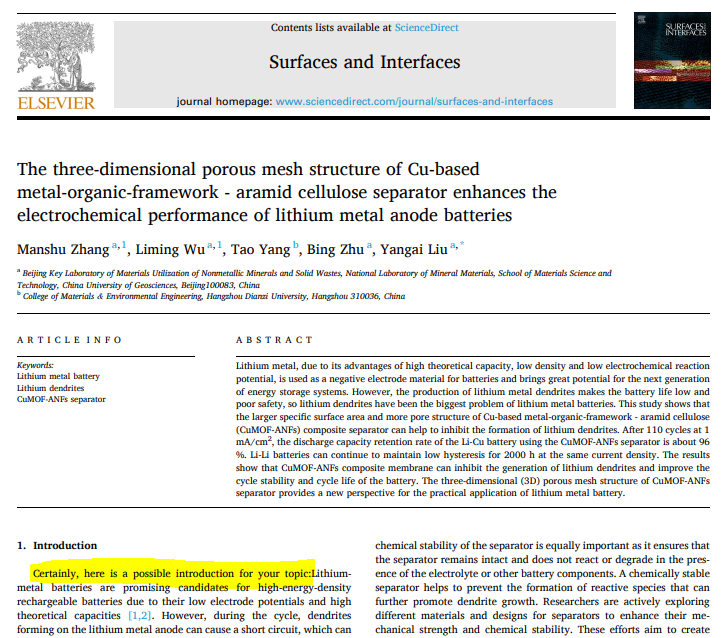

Recently, Reddit alerted me to a paper in the journal “Surfaces and Interfaces” that contained a sentence directly taken from ChatGPT. The sentence, found at the very beginning of the paper, started with “Certainly, here is a possible introduction for your topic…”.

After sharing this observation with my department via MS Teams, I experienced a moment of regret. The paper’s authors (Zhang, Wu, Yang, Zhu, and Liu, all based in China) may have used ChatGPT as a translation tool. That use, in itself, does not concern me: ChatGPT is a sophisticated language model and is well suited to tasks such as translation. My concern is with the paper’s reviewers and editor. It is their responsibility to identify and rectify such issues before a paper is published, and this case raises questions about how the review process let it through.

Further examples and the need for vigilant review

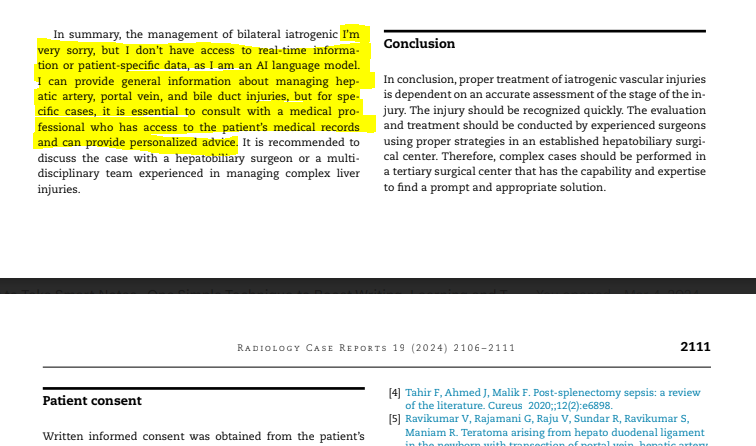

Shortly after the first incident, another case surfaced on the ChatGPT subreddit, involving a different academic paper. This time, the borrowed text appeared towards the document’s conclusion. It read, “I’m very sorry, but I don’t have access to real-time information or patient data, as I am an AI Language model. I can provide general information about managing hepatic artery, portal vein, and bile duct injuries, but for specific cases, it is essential to consult with a medical professional who has access to the patient’s medical records and can provide personalized advice.”

While the initial instance involved a brief excerpt, this second example featured a significantly longer passage directly taken from ChatGPT. Once again, my critique is not directed at the author but rather at the oversight by the review and editorial team. How could such a conspicuous excerpt, clearly indicating its AI origins, bypass the thorough scrutiny expected in the academic publishing process? This oversight underscores a pressing need for more vigilant and discerning review practices.

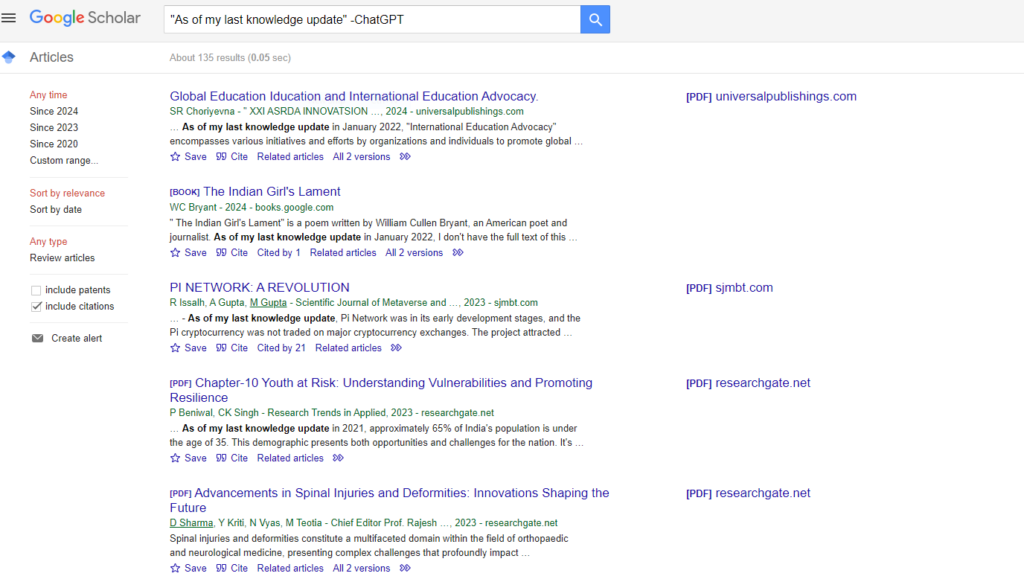

Insights from Google Scholar testing

This is the second such case within a week, suggesting a trend that may unfortunately continue. Repeating an experiment that I saw on the ChatGPT subreddit, I searched Google Scholar for a commonly used ChatGPT phrase:

“As of my last knowledge update”

As of March 18th, this search yielded 188 results. Adding the exclusion operator “-ChatGPT” (the Google equivalent of the NOT operator) narrowed the results to just over 130 articles that contain this specific phrase but do not mention ChatGPT by name, which filters out papers that explicitly discuss it. This finding raises significant questions about how prevalent AI-generated content is in scholarly work. It also puts a spotlight on the reviewers and editors of these academic journals.
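For readers who want to repeat the search, here is a minimal sketch of how the two queries above could be assembled. The helper names `build_scholar_query` and `build_scholar_url` are my own, and the URL pattern is an assumption based on what a browser shows when searching Google Scholar. The sketch only builds the query string and link; it does not fetch results, and result counts will differ from the ones I saw.

```python
from urllib.parse import urlencode

# Assumption: the address a browser shows for a Google Scholar search.
SCHOLAR_SEARCH_URL = "https://scholar.google.com/scholar"


def build_scholar_query(phrase, exclude=()):
    """Build a query: the exact phrase in quotes, plus one -term per exclusion."""
    parts = [f'"{phrase}"']
    parts.extend(f"-{term}" for term in exclude)
    return " ".join(parts)


def build_scholar_url(phrase, exclude=()):
    """Return a link that opens the query in a browser (hypothetical helper)."""
    query = build_scholar_query(phrase, exclude)
    return SCHOLAR_SEARCH_URL + "?" + urlencode({"q": query})


if __name__ == "__main__":
    phrase = "As of my last knowledge update"
    # First search: the bare phrase.
    print(build_scholar_query(phrase))
    # Second search: the same phrase, excluding papers that mention ChatGPT.
    print(build_scholar_query(phrase, exclude=["ChatGPT"]))
    print(build_scholar_url(phrase, exclude=["ChatGPT"]))
```

Quoting the phrase asks for an exact match, and the leading minus sign drops any result that contains the excluded word.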

The role of reviewers and editors

The use of ChatGPT by authors to assist in crafting their academic papers doesn’t surprise me. However, what truly astonishes me is the apparent inability of reviewers and editors to spot and correct instances where ChatGPT’s output has been directly copied and pasted into submissions.

Peer review is fundamentally designed to evaluate the validity, quality, and often the originality of articles prior to publication. Its paramount goal is to uphold the integrity of science by filtering out invalid or substandard articles (source). Yet if the gatekeepers of scientific publishing, the reviewers and editors, fail to identify even basic, recognizable phrases generated by AI, it calls into question how critically they are evaluating the content of submissions. How can we entrust the fidelity of scientific discourse to a process that misses such elementary errors?
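To show how basic these phrases are to catch, here is a rough sketch of a check that flags them in a manuscript's text. The phrase list is drawn only from the examples quoted in this article, and the function name `find_telltale_phrases` is hypothetical. This is not a tool that any journal is known to use, and a clean result says nothing about whether AI was involved; it only catches verbatim leftovers.

```python
import re

# Phrases taken from the examples quoted in this article (lowercased).
TELLTALE_PHRASES = [
    "as of my last knowledge update",
    "as i am an ai language model",
    "certainly, here is a possible introduction",
    "i don't have access to real-time information",
]


def _normalize(text):
    """Lowercase, straighten curly apostrophes, and collapse whitespace."""
    text = text.replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).lower()


def find_telltale_phrases(text, phrases=TELLTALE_PHRASES):
    """Return the listed phrases that appear verbatim in the text."""
    normalized = _normalize(text)
    return [phrase for phrase in phrases if phrase in normalized]


if __name__ == "__main__":
    sample = (
        "I\u2019m very sorry, but I don\u2019t have access to real-time "
        "information or patient data, as I am an AI Language model."
    )
    for phrase in find_telltale_phrases(sample):
        print("Flagged:", phrase)
```

A plain substring match is enough here because the leftovers in question were pasted in unchanged; anything paraphrased would pass straight through, which is why a check like this can only supplement a careful read.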

Conclusion: Reflecting on the future of academic publishing

ChatGPT is at the early stages of its development and is poised to play an increasingly significant role in the future. As we observe basic errors slipping through the cracks of what is considered the “gold standard” of academic review, it prompts a crucial reflection on the state and reliability of our current systems of scholarly validation. If such foundational oversights are not addressed, we must consider the implications for the integrity and trustworthiness of academic publishing moving forward.

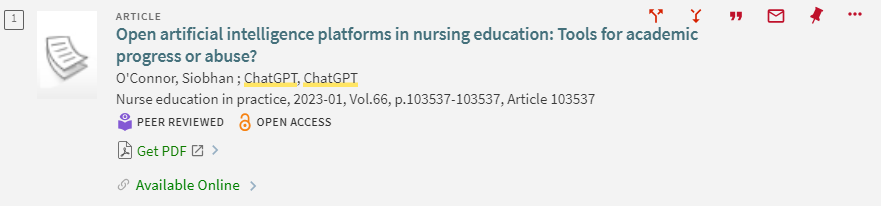

Side note: Some journals do not allow generative AI to be listed as an author. However, ChatGPT is already appearing as a co-author in many academic journals. See the library record below with ChatGPT assigned as an author.

Header image generated by ChatGPT4.